
fix(doc): add tutorial for tfLite plugin

Signed-off-by: Jianxiang Ran <rxan_embedded@163.com>

17 changed files with 225 additions and 1 deletion

docs/en_US/guide/ai/tensorflow_lite.md (+162, -0)

BIN

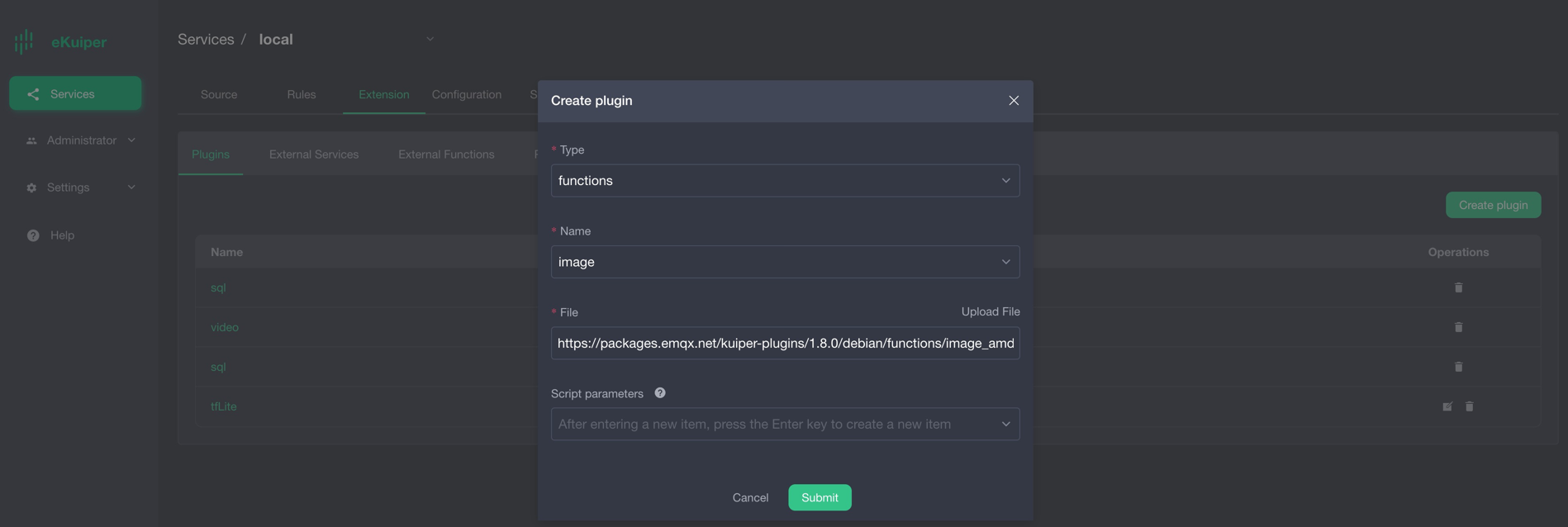

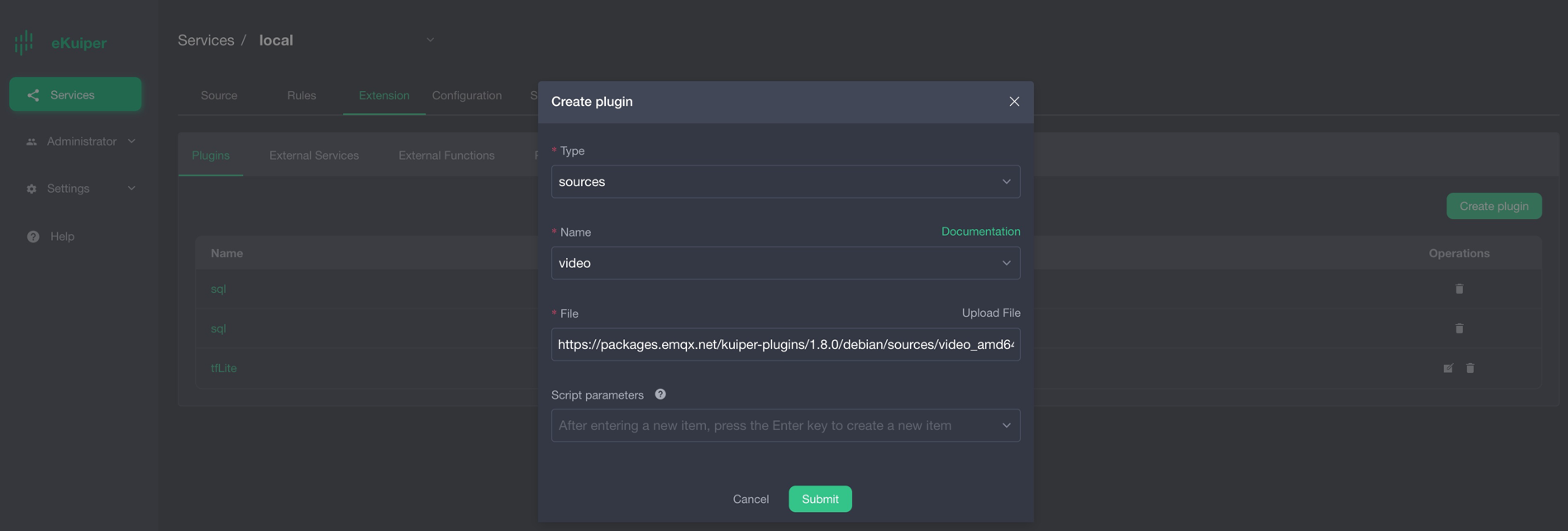

docs/en_US/resources/image_install.png

BIN

docs/en_US/resources/image_register.png

BIN

docs/en_US/resources/mobilenet_upload.png

BIN

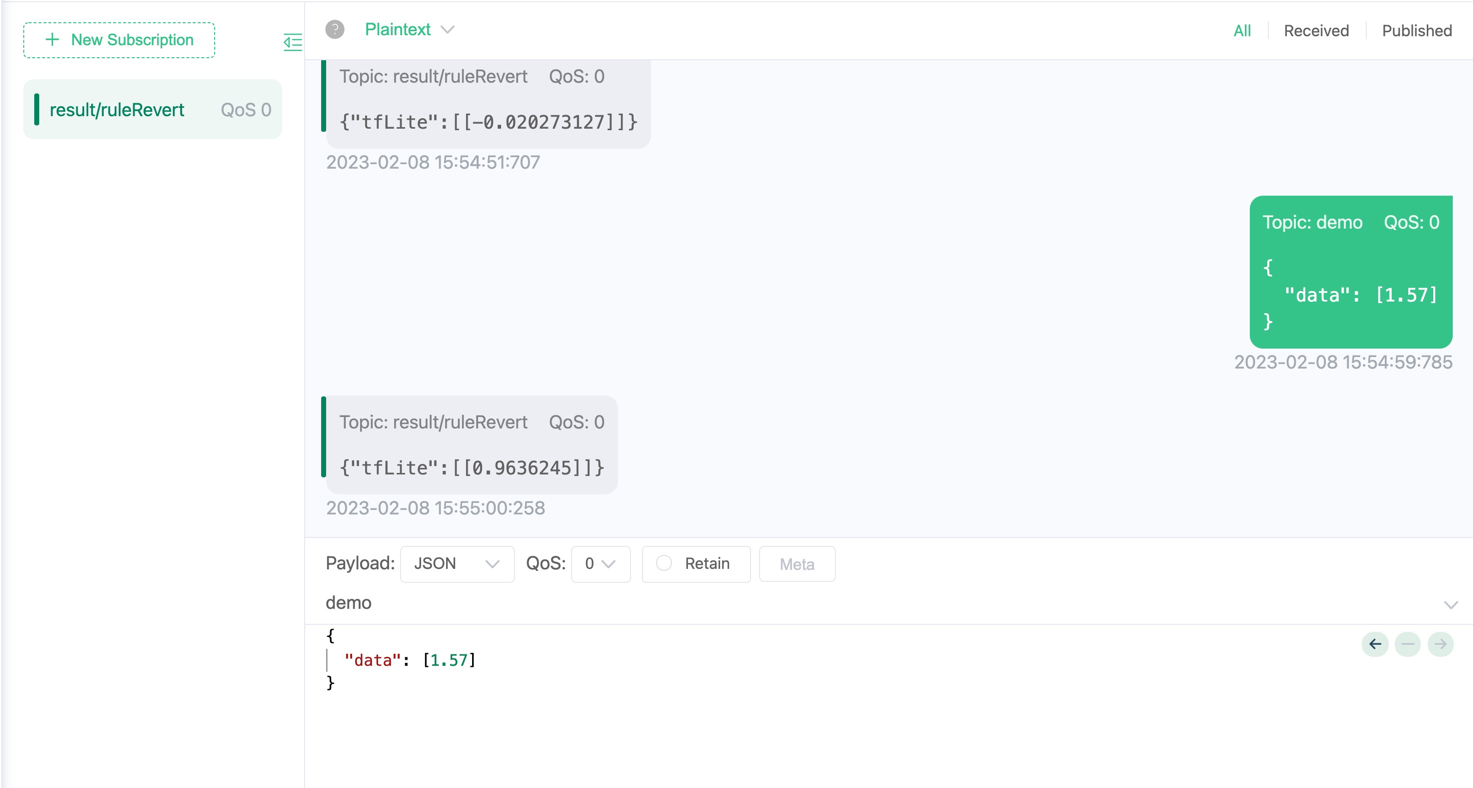

docs/en_US/resources/mqttx_mobilenet.png

BIN

docs/en_US/resources/mqttx_sin.png

BIN

docs/en_US/resources/sin_upload.png

BIN

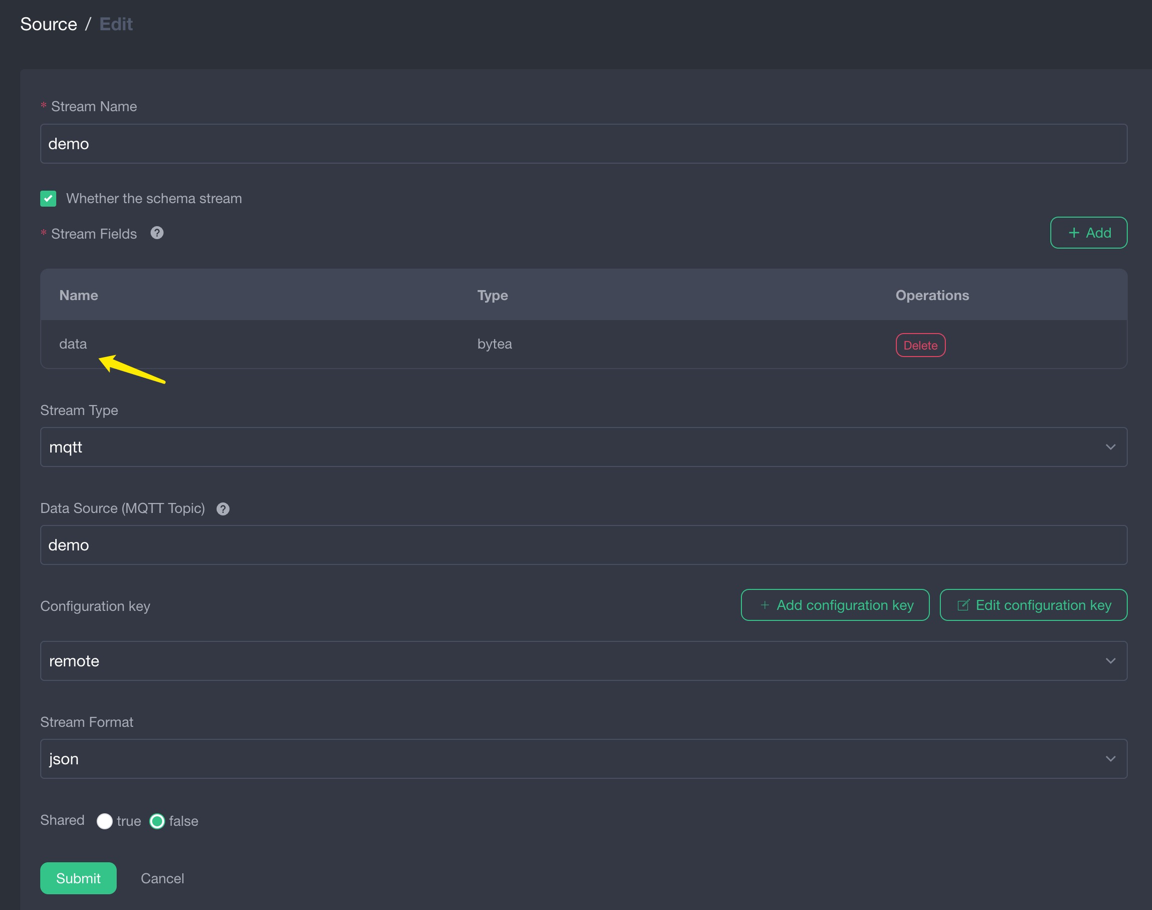

docs/en_US/resources/stream_byte.png

BIN

docs/en_US/resources/tflite_image_result.png

BIN

docs/en_US/resources/tflite_install.png

BIN

docs/en_US/resources/tflite_register.png

BIN

docs/en_US/resources/tflite_resize_rule.png

BIN

docs/en_US/resources/tflite_sin_rule.png

BIN

docs/en_US/resources/video_config.png

BIN

docs/en_US/resources/video_install.png

docs/zh_CN/guide/ai/tensorflow_lite.md (+63, -1)

docs/zh_CN/resources/tflite_image_result.png (binary)