Explorar o código

0.0.2 version

Modificáronse 86 ficheiros con 12912 adicións e 1128 borrados

+ 7

- 0

.gitignore

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 43

- 0

Makefile

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 46

- 4

README.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 160

- 0

common/data.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 343

- 0

common/time_util.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 164

- 31

common/util.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 10

- 0

docs/cli/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

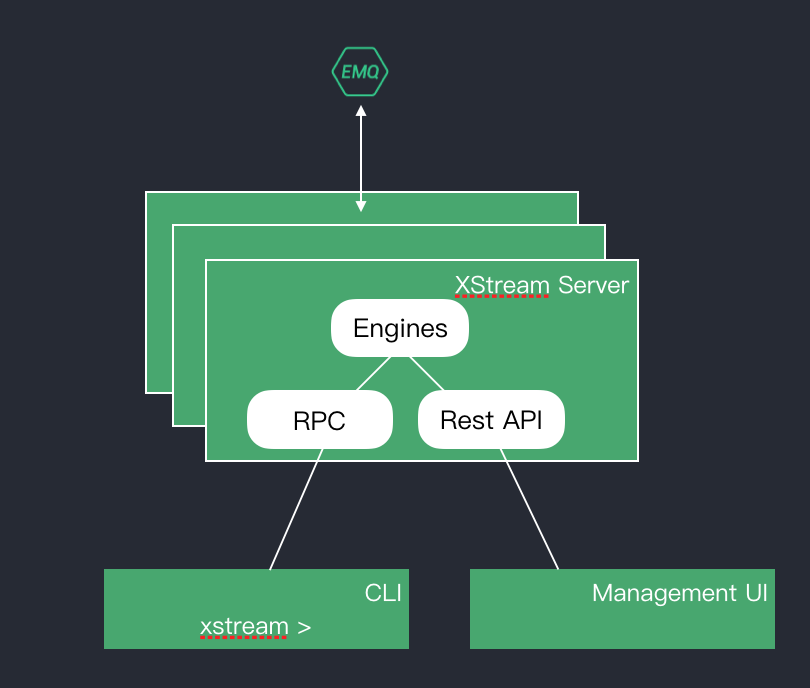

BIN=BIN

docs/cli/resources/arch.png

+ 171

- 0

docs/cli/rules.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 118

- 0

docs/cli/streams.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 14

- 0

docs/extension/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 151

- 0

docs/getting_started.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 12

- 0

docs/index.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 11

- 0

docs/operation/configuration_file.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 20

- 0

docs/operation/install/cent-os.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 47

- 0

docs/operation/install/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 12

- 0

docs/operation/operations.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 6

- 0

docs/operation/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 58

- 0

docs/rules/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 6

- 0

docs/rules/sinks/logs.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 10

- 0

docs/rules/sinks/mqtt.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 49

- 0

docs/rules/sources/mqtt.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 93

- 0

docs/sqls/built-in_functions.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 29

- 0

docs/sqls/data_types.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 162

- 0

docs/sqls/json_expr.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 8

- 0

docs/sqls/overview.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 307

- 0

docs/sqls/query_language_elements.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN=BIN

docs/sqls/resources/hoppingWindow.png

BIN=BIN

docs/sqls/resources/sessionWindow.png

BIN=BIN

docs/sqls/resources/slidingWindow.png

BIN=BIN

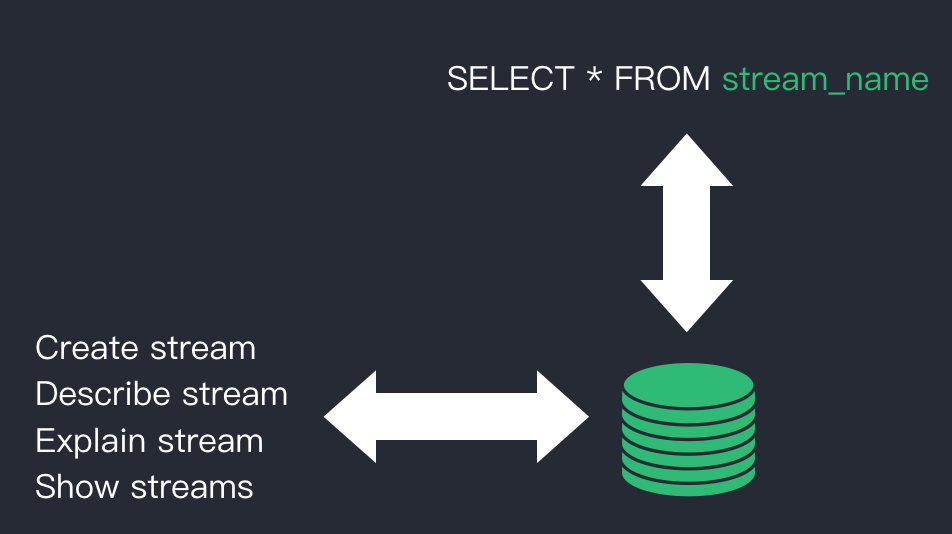

docs/sqls/resources/stream_storage.png

BIN=BIN

docs/sqls/resources/tumblingWindow.png

+ 87

- 0

docs/sqls/streams.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 97

- 0

docs/sqls/windows.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 3

- 0

etc/client.yaml

|

||

|

||

|

||

|

||

+ 12

- 0

etc/mqtt_source.yaml

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 4

- 0

etc/xstream.yaml

|

||

|

||

|

||

|

||

|

||

+ 17

- 0

go.mod

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 442

- 160

xsql/ast.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 42

- 0

xsql/ast_agg_stmt_test.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 188

- 0

xsql/funcs_aggregate.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 318

- 0

xsql/funcs_ast_validator.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 404

- 0

xsql/funcs_ast_validator_test.go

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|