
Overview

eKuiper FVT (functional verification tests) covers the following scenarios:

- Basic functions of HTTP REST-API

- Basic functions of CLI

- Complex end-to-end scenarios for eKuiper sources, processing, and sinks

The scenarios are invoked automatically in GitHub Actions on every new code commit or pull request. A Raspberry Pi continuous integration environment will also be available for running the test cases on ARM. If any FVT run fails, please re-check the code or update the scripts if necessary.

The eKuiper project uses JMeter for writing the scripts for the following reasons:

- It is easy to write test cases for the HTTP REST-API and CLI.

- It can publish and subscribe to MQTT messages. The eKuiper end-to-end scenarios require an MQTT client to publish and subscribe to messages, and JMeter provides a unified approach for supporting such functions.

- It supports complex content assertions. Besides some simple content assertions, JMeter also provides the BeanShell Assertion, which can be used to extract and process complex message contents.

Run script in local development environment

Prepare JMeter

eKuiper uses JMeter for the FVT test scenarios, including REST-API, CLI, and end-to-end test scenarios.

- Install JRE - requires JRE 8+

- Download and extract JMeter.

Install MQTT broker

Because the test scripts use an MQTT broker as the source and sink of eKuiper rules, an MQTT broker is required for running the scripts. If your broker cannot be reached at `tcp://127.0.0.1:1883`, modify the configuration and the script to specify your MQTT broker address.

- Modify `servers` to your MQTT broker address in the eKuiper configuration file `etc/mqtt_source.yaml`.

- Modify the script file that you want to run.

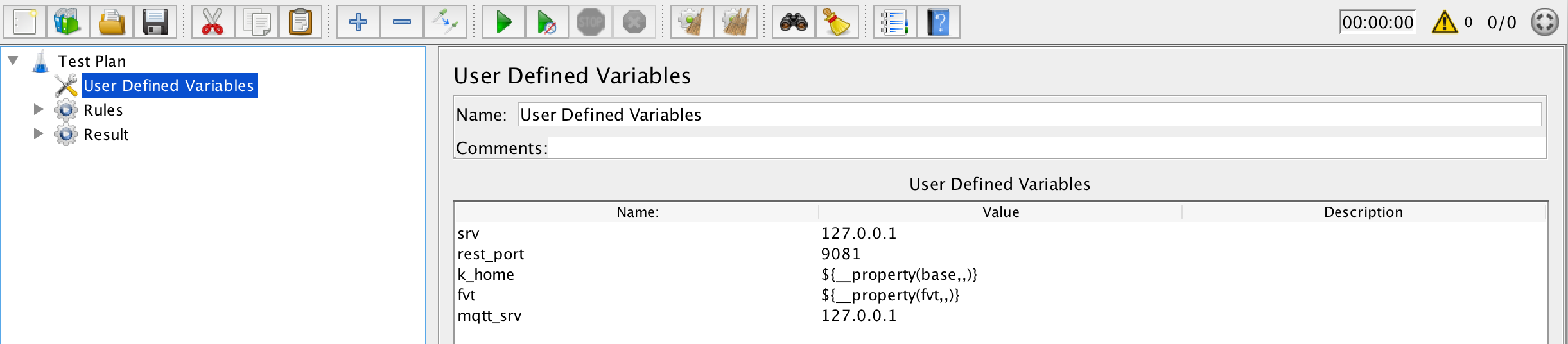

- `mqtt_srv`: the default value is `127.0.0.1`; update it if you use a different broker. See the screenshot above, `Test Plan > User Defined Variables > mqtt_srv`.

- If you run the eKuiper server at another address or port, you also need to change the two settings below.

  - `Test Plan > User Defined Variables > srv`: the eKuiper server address, `127.0.0.1` by default.

  - `Test Plan > User Defined Variables > rest_port`: the eKuiper server REST API port, `9081` by default; change it if eKuiper runs on a different port.

Run JMeter

For most scripts, you can start JMeter in the default way, such as `bin/jmeter.sh` on Mac or Linux. Some scripts, however, need parameters to be passed before they run; refer to the scenarios below for details. Make sure that the MQTT broker and eKuiper are started before running the tests.

Scenarios

The script tests the basic steps for stream operations, including both the REST API and the CLI.

- Create/Delete/Describe/Show stream for RestAPI

- Create/Delete/Describe/Show stream for CLI

The script needs to be told the location of the eKuiper install directory so that it knows where to invoke the eKuiper CLI.

- Specify the `base` property in the JMeter command line; `base` is where eKuiper is installed. The command for starting JMeter is shown below.

  `bin/jmeter.sh -Dbase="/opt/kuiper"`

The script tests stream and rule operations.

- Create a stream with MQTT source, and then call rule management

- Create/Delete/Describe/Show rule for RestAPI

- Create/Delete/Describe/Show rule for CLI

- Delete stream definition at the last step of test scenario

The script needs to be told the location of the eKuiper install directory so that it knows where to invoke the eKuiper CLI.

- Specify the `base` property in the JMeter command line; `base` is where eKuiper is installed.

- Specify the `fvt` property in the JMeter command line; `fvt` is where you develop eKuiper. The script reads the rule file `test/rule1.txt` from that location.

- Modify `mqtt.server` to your MQTT broker address in the file `test/rule1.txt`.

The command for starting JMeter is shown below.

bin/jmeter.sh -Dbase="/opt/kuiper" -Dfvt="/Users/rockyjin/Downloads/workspace/edge/src/ekuiper"
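When several scripts are launched from a wrapper, the `-D` properties can be assembled programmatically. The following is a minimal sketch, not part of the eKuiper repository: the helper name `build_jmeter_command` and the example paths are hypothetical, and only the `-Dbase` and `-Dfvt` properties described above are assumed.

```python
from typing import List, Optional


def build_jmeter_command(jmeter_home: str,
                         base: Optional[str] = None,
                         fvt: Optional[str] = None) -> List[str]:
    """Return the argument list for starting JMeter with the properties the scripts read.

    jmeter_home is a hypothetical path to the extracted JMeter directory.
    """
    command = [f"{jmeter_home}/bin/jmeter.sh"]
    if base is not None:
        # base: where eKuiper is installed (used to locate the CLI)
        command.append(f"-Dbase={base}")
    if fvt is not None:
        # fvt: where eKuiper is developed (used to locate test files)
        command.append(f"-Dfvt={fvt}")
    return command


if __name__ == "__main__":
    # Example paths are placeholders, not required locations.
    print(" ".join(build_jmeter_command("/opt/jmeter", base="/opt/kuiper", fvt="/home/dev/ekuiper")))
```

The returned list can be passed to `subprocess.run` unchanged, which avoids shell quoting issues when a path contains spaces.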

The scenario tests a rule that selects all records from a stream.

- The stream source is MQTT, and JMeter sends JSON data to an MQTT topic. The data is read from the file `iot_data.txt`, where the 1st column is `device_id`, the 2nd column is `temperature`, and the 3rd column is `humidity`. The file contains 10 records in total.

- The processing SQL is `SELECT * FROM demo`, so all of the data is processed and sent to the sinks.

- The rule has two sinks: a log sink and an MQTT sink. The result is sent to both.

Another JMeter mock-up user subscribes to the MQTT result topic. JMeter validates the number and content of the messages sent by the rule. If the record content is not correct, the JMeter response assertion fails. If the number of records is not correct, the script does not stop until the CI (continuous integration) pipeline kills it through its timeout settings. If you run the script locally, you have to stop the test manually.
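To make the data flow concrete, here is a small sketch of how rows with the three columns described above could be turned into JSON payloads. It is an illustration only: the comma delimiter, the numeric types, and the function name `rows_to_payloads` are assumptions, since the actual conversion is done inside the JMeter script.

```python
import json
from typing import List


def rows_to_payloads(lines: List[str]) -> List[str]:
    """Convert delimited rows (device_id, temperature, humidity) to JSON strings.

    Assumes comma-separated columns; the real file layout may differ.
    """
    payloads = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        device_id, temperature, humidity = [col.strip() for col in line.split(",")]
        payloads.append(json.dumps({
            "device_id": device_id,
            "temperature": float(temperature),
            "humidity": float(humidity),
        }))
    return payloads


if __name__ == "__main__":
    # Sample rows invented for illustration, not taken from iot_data.txt.
    for payload in rows_to_payloads(["dev1,25.5,60", "dev2,31,55"]):
        print(payload)
```

With `SELECT * FROM demo`, the subscriber is expected to see one result per payload, which is why the script counts messages as well as checking their content.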

This scenario is very similar to the previous one, except that the rule filters the records with a condition.

- The processing SQL is `SELECT * FROM demo WHERE temperature > 30`, so all records with a temperature of 30 or lower are filtered out.

- The script reads data from the file `iot_data.txt`, 10 records in total.

- Another JMeter mock-up user subscribes to the MQTT result topic, and the expected results are saved in the file `select_condition_iot_data.txt`.

If the record content is not correct, the JMeter response assertion fails. If the number of records is not correct, the script does not stop until the CI (continuous integration) pipeline kills it through its timeout settings. If you run the script locally, you have to stop the test manually.
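The effect of the `WHERE temperature > 30` condition can be sketched in a few lines. This is a toy model of the filter, not eKuiper code; the sample records are invented, and the function name `apply_condition` is hypothetical.

```python
from typing import Dict, List


def apply_condition(records: List[Dict], threshold: float = 30) -> List[Dict]:
    """Keep only records whose temperature is strictly greater than the threshold."""
    return [record for record in records if record["temperature"] > threshold]


if __name__ == "__main__":
    sample = [
        {"device_id": "dev1", "temperature": 25, "humidity": 60},
        {"device_id": "dev2", "temperature": 30, "humidity": 55},  # exactly 30 is dropped
        {"device_id": "dev3", "temperature": 31, "humidity": 50},
    ]
    print(apply_condition(sample))
```

Note that a record with a temperature of exactly 30 does not pass, because the comparison is strict.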

The script automates the steps described in this blog, except that the sink target is changed to a local EMQ broker (not AWS IoT Hub).

The processing SQL is as follows.

`SELECT avg(temperature) AS t_av, max(temperature) AS t_max, min(temperature) AS t_min, COUNT(*) AS t_count, split_value(mqtt(topic), "/", 1) AS device_id FROM demo GROUP BY device_id, TUMBLINGWINDOW(ss, 5)`

Another JMeter mock-up user subscribes to the MQTT result topic and waits for 15 seconds to collect all of the analysis result arrays. A BeanShell assertion then calculates the total of `t_count` for devices 1 and 2. If the total is not correct, the test fails.
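The aggregation and the final count check can be illustrated with a simplified model. The sketch below assigns events to 5-second tumbling windows using explicit timestamps, which is an assumption made for reproducibility; the `window` key, the function names, and the sample events are all hypothetical and do not appear in the rule output.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def tumbling_window_aggregate(events: List[Tuple[float, str, float]],
                              window_seconds: int = 5) -> List[Dict]:
    """Group (timestamp, device_id, temperature) events per window and device."""
    buckets = defaultdict(list)
    for timestamp, device_id, temperature in events:
        window_index = int(timestamp // window_seconds)  # non-overlapping windows
        buckets[(window_index, device_id)].append(temperature)
    results = []
    for (window_index, device_id), temps in sorted(buckets.items()):
        results.append({
            "window": window_index,  # illustrative only
            "device_id": device_id,
            "t_av": sum(temps) / len(temps),
            "t_max": max(temps),
            "t_min": min(temps),
            "t_count": len(temps),
        })
    return results


def total_count(results: List[Dict], device_id: str) -> int:
    """Sum t_count over all windows for one device, as the assertion does."""
    return sum(r["t_count"] for r in results if r["device_id"] == device_id)


if __name__ == "__main__":
    events = [(0, "1", 20), (1, "1", 30), (6, "1", 40), (2, "2", 10)]
    results = tumbling_window_aggregate(events)
    print(results)
    print(total_count(results, "1"), total_count(results, "2"))
```

Summing `t_count` across windows is robust to where the window boundaries fall, which is presumably why the assertion checks the total rather than each window separately.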

This script creates a stream and a rule, then gets the metrics of the rule and asserts the number of messages processed in the stream processing pipeline. Additionally, the script stops, starts, or restarts the rule and verifies the metric values of the rule.

Another JMeter mock-up user subscribes to the MQTT result topic and asserts the message number and contents.

The script tests the following cases:

- Use SQL to select a field that does not exist in the stream definition; it returns `[{}]`.

- The stream definition is changed, and the rule works well after it is restarted.

Another JMeter mock-up user subscribes to the MQTT result topic and asserts the message number and contents.
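The `[{}]` result for a missing field can be pictured with a toy projection. This is only a model of the behavior described above, not the eKuiper implementation; `select_fields` is a hypothetical helper.

```python
import json
from typing import Dict, List


def select_fields(record: Dict, fields: List[str]) -> List[Dict]:
    """Project the requested fields, silently omitting any that are absent.

    The result is wrapped in a list to mirror the array sent to the sink.
    """
    return [{name: record[name] for name in fields if name in record}]


if __name__ == "__main__":
    record = {"temperature": 25, "humidity": 60}
    print(json.dumps(select_fields(record, ["temperature"])))
    print(json.dumps(select_fields(record, ["no_such_field"])))
```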

- The script adds an `ORDER BY` statement based on the aggregation rule scenario.

  `SELECT temperature, humidity, split_value(mqtt(topic), "/", 1) AS device_id FROM demo GROUP BY TUMBLINGWINDOW(ss, 10) ORDER BY device_id DESC, temperature`

- Another JMeter mock-up user subscribes to the MQTT result topic and asserts that the `device_id` field is in descending order and `temperature` is in ascending order.
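The ordering that the assertion checks (`device_id` descending, then `temperature` ascending) can be reproduced with two stable sorts. The sketch below is an illustration with invented records; the function names are hypothetical and the real check is done in the JMeter script.

```python
from typing import Dict, List


def order_results(records: List[Dict]) -> List[Dict]:
    """Sort by device_id descending, then temperature ascending within a device."""
    # Sort by the secondary key first; Python's sort is stable, so the
    # second pass keeps the temperature order inside each device_id.
    by_temperature = sorted(records, key=lambda r: r["temperature"])
    return sorted(by_temperature, key=lambda r: r["device_id"], reverse=True)


def is_correctly_ordered(records: List[Dict]) -> bool:
    """Check each adjacent pair against the expected ordering."""
    return all(
        a["device_id"] > b["device_id"]
        or (a["device_id"] == b["device_id"] and a["temperature"] <= b["temperature"])
        for a, b in zip(records, records[1:])
    )


if __name__ == "__main__":
    sample = [
        {"device_id": "1", "temperature": 30},
        {"device_id": "2", "temperature": 25},
        {"device_id": "2", "temperature": 20},
        {"device_id": "1", "temperature": 10},
    ]
    ordered = order_results(sample)
    print(ordered, is_correctly_ordered(ordered))
```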

The test script is used for testing the eKuiper EdgeX source. To run the script:

- An EdgeX message bus publish tool should be compiled and run during the test.

  `go build -o test/edgex/pub test/edgex/pub.go`

- Run JMeter with the following command, specifying the `fvt` property in the JMeter command line; `fvt` is where you develop eKuiper. The script searches for `test/edgex/pub` in that location.

  `bin/jmeter.sh -Dfvt="/Users/rockyjin/Downloads/workspace/edge/src/ekuiper"`

- The processing SQL is `SELECT * FROM demo WHERE temperature > 30`, so all records with a temperature of 30 or lower are filtered out.

- Another JMeter mock-up user subscribes to the MQTT result topic and asserts the message number and contents.

The test script is used for testing the use of another EdgeX source configuration in eKuiper.

- In the `edgex.yaml` configuration file, the additional configuration below is specified.

      application_conf: #Conf_key
        protocol: tcp
        server: localhost
        port: 5571
        topic: application

- In the create stream statement, the test script uses the `CONF_KEY` keyword to apply the overriding configuration values specified in `edgex.yaml`.

CREATE STREAM application () WITH (FORMAT="JSON", TYPE="edgex", CONF_KEY = "application_conf")

As with the steps required for `select_edgex_condition_rule.jmx`, the EdgeX value descriptor service and the message bus publish tool should be ready.

The test script verifies the EdgeX message bus sink. Only one message meets the condition of the created rule, and it is sent to the EdgeX message bus sink.

As with the previous two test cases, besides preparing the pub application, another sub application should also be prepared.

`go build -o test/edgex/sub/sub test/edgex/sub/sub.go`

The test script verifies EdgeX array data type support. The rule uses JSON expressions in both the SELECT and WHERE clauses. The sink result is sent to the MQTT broker, and the script verifies the projected result in sampler assertions.

For this test script, you need to prepare the pub application.

The script is an end-to-end plugin test. It requires a mock HTTP server and a plugin.

```shell
go build -o test/plugins/service/http_server test/plugins/service/server.go
```

The script is an end-to-end portable plugin test. It requires a mock HTTP server and a plugin, which is built by `prepare_plugin.sh`.

```shell
go build -o test/plugins/service/http_server test/plugins/service/server.go
```

The script has two parts that test the Go and Python SDKs respectively. It covers the CRUD operations of the portable plugin and the running of the source, function, and sink defined in that plugin.

The test script verifies the HTTP pull source. It sends requests to a server. The script sets `incremental` to true, so each response is compared with the previous one; if the responses of two consecutive requests are the same, the result is not sent out. This script also requires the server to be running; refer to the previous test case for how to compile and run it.
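The incremental comparison can be sketched as a filter that drops a response when it equals the one immediately before it. This is a simplified model of the behavior described above, not the eKuiper source code; `incremental_results` is a hypothetical name and the sample responses are invented.

```python
from typing import List

_NO_PREVIOUS = object()  # sentinel: nothing has been pulled yet


def incremental_results(responses: List[str]) -> List[str]:
    """Emit a response only when it differs from the previous response."""
    emitted = []
    previous = _NO_PREVIOUS
    for response in responses:
        if response != previous:
            emitted.append(response)
        previous = response  # always compare against the latest pull
    return emitted


if __name__ == "__main__":
    pulls = ['{"v": 1}', '{"v": 1}', '{"v": 2}', '{"v": 1}']
    print(incremental_results(pulls))
```

Only consecutive duplicates are suppressed in this model, so a value that reappears after a different response is emitted again.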

This script verifies binary data support. The rule consumes binary image data sent from the MQTT broker, processes it, and finally sends the image data back to the MQTT broker. The script verifies the BASE64 encoding of the image produced by the eKuiper rule engine.

- `binary_image_hex.txt`: the data file of the image.

- `binary_image_base64.txt`: the BASE64 file of the image.
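The relationship between the two files can be illustrated by converting a hex string to its BASE64 form. The sketch assumes, based only on the file names, that the data file holds the image bytes as hexadecimal text; the helper name and the sample input are hypothetical.

```python
import base64


def hex_to_base64(hex_text: str) -> str:
    """Decode hexadecimal text to raw bytes and return the BASE64 encoding."""
    raw = bytes.fromhex(hex_text.strip())
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # "48656c6c6f" is the hex form of the ASCII bytes for "Hello".
    print(hex_to_base64("48656c6c6f"))
```

Comparing the encoded text rather than raw bytes keeps the expected value readable in a plain-text assertion.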

This script verifies the batch table support.

- Create an MQTT stream. The data sent is read from the file `iot_data_id.txt`, which defines an id, temperature, and humidity.

- Create a table whose type is file and which reads `lookup.json` as its content. It works like a dictionary that maps a name and size to each id.

- The rule joins the stream and the table by id to look up the name, and filters by temperature.

The result data is sent back to the MQTT broker and compared with `table_static_result_data.txt`.
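The lookup join can be modeled as a dictionary lookup followed by a filter. The sketch below makes several assumptions that the description does not state: an inner join, a strict "greater than" temperature condition, a hypothetical threshold, and invented sample rows.

```python
from typing import Dict, List


def join_with_lookup(stream_records: List[Dict],
                     lookup_rows: List[Dict],
                     min_temperature: float) -> List[Dict]:
    """Join stream records to table rows by id and keep the hot ones."""
    lookup = {row["id"]: row for row in lookup_rows}  # id -> table row
    results = []
    for record in stream_records:
        match = lookup.get(record["id"])
        if match is None:
            continue  # inner join assumed: unmatched ids are dropped
        if record["temperature"] <= min_temperature:
            continue
        results.append({
            "id": record["id"],
            "temperature": record["temperature"],
            "name": match["name"],
        })
    return results


if __name__ == "__main__":
    stream = [
        {"id": 1, "temperature": 35},
        {"id": 2, "temperature": 20},
        {"id": 3, "temperature": 40},
    ]
    table = [
        {"id": 1, "name": "device1", "size": 2},
        {"id": 2, "name": "device2", "size": 4},
    ]
    print(join_with_lookup(stream, table, min_temperature=30))
```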

This script verifies support for continuously updated tables. Unlike a static table, a continuous table is updated whenever new data is received.

- Create an MQTT stream with a topic named `iot`. The data sent is read from the file `iot_data_multi_topics.txt`; entries whose topic name matches define temperature and humidity.

- Create a table whose type is mqtt with the topic name `state`. The data sent is also read from the file `iot_data_multi_topics.txt`; entries whose topic name matches define the state. The table records the latest state from the `state` topic, which works like a control topic that switches the processing on or off.

- The rule joins the stream and the table, and filters by both the state from the table and the temperature from the stream.

The result data is sent back to the MQTT broker and compared with `table_cont_result_data.txt`.
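The control-topic behavior can be pictured as a loop that remembers the latest state and applies it to each stream record. This is a toy model under stated assumptions: the state is encoded as `1` for on and `0` for off, nothing is emitted before the first state arrives, and the threshold and sample messages are invented.

```python
from typing import Dict, List, Optional, Tuple


def process_messages(messages: List[Tuple[str, Dict]],
                     min_temperature: float) -> List[Dict]:
    """Replay (topic, payload) messages in order and return the emitted records."""
    latest_state: Optional[int] = None  # the table keeps only the newest state
    results = []
    for topic, payload in messages:
        if topic == "state":
            latest_state = payload["state"]
        elif topic == "iot":
            # Emit only while switched on and above the temperature threshold.
            if latest_state == 1 and payload["temperature"] > min_temperature:
                results.append(payload)
    return results


if __name__ == "__main__":
    messages = [
        ("iot", {"temperature": 35}),    # no state yet: dropped
        ("state", {"state": 1}),
        ("iot", {"temperature": 36}),    # on and hot: emitted
        ("iot", {"temperature": 20}),    # on but cool: dropped
        ("state", {"state": 0}),
        ("iot", {"temperature": 40}),    # off: dropped
    ]
    print(process_messages(messages, min_temperature=30))
```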

This script verifies shared source instances across rules. When the shared property is set to true in the stream definition, all rules using the stream share the same source instance for better performance.

- Create an MQTT stream with the `SHARED` option set to true. The data sent is read from the file `iot_data.txt`, which defines temperature and humidity.

- Create 3 rules that all select from the stream just created, each with a different WHERE filter. They should share the same MQTT source instance.

- Send data to the stream. By checking the results, make sure that all 3 rules receive exactly the same copy of the data but apply different filters.

The result data is sent back to the MQTT broker and compared with `select_condition_iot_data.txt`, `select_condition_iot_data2.txt`, and `select_condition_iot_data3.txt` respectively.

The test script is used for testing the eKuiper EdgeX connection selector. To run the script:

- A Redis server needs to be installed, because EdgeX uses Redis as its message bus.

  - Modify `etc/connections/connection.yaml` and make sure the server and port are correct.

        edgex:
          redisMsgBus: #connection key
            protocol: redis
            server: 127.0.0.1
            port: 6379
            type: redis

  - Modify the JMeter script and make sure `redis_srv` is set to the correct Redis address.

- An EdgeX message bus publish/subscribe tool should be compiled and run during the test.

  `go build -o test/edgex/pub test/edgex/pub.go`

  `go build -o test/edgex/sub test/edgex/sub.go`

- Run JMeter with the following command, specifying the `fvt` property in the JMeter command line; `fvt` is where you develop eKuiper. The script searches for `test/edgex/pub` and `test/edgex/sub` in that location.

  `bin/jmeter.sh -Dfvt="/Users/rockyjin/Downloads/workspace/edge/src/ekuiper"`

- The JMeter script directs eKuiper to subscribe to data from the EdgeX Redis message bus according to the connection info in `etc/connections/connection.yaml`, and to sink the result to the EdgeX Redis message bus using the same connection info.

- JMeter uses the pub tool to generate data, and the sub tool to get the processed result and assert the message number and contents.

The test script is used for testing Redis KV storage. To run the script:

- A Redis server needs to be installed, because eKuiper uses Redis as its KV storage.

  - Modify `etc/kuiper.yaml` and make sure the type is set to `redis` and that the server, port, and password are correct.

        store:
          #Type of store that will be used for keeping state of the application
          type: redis
          redis:
            host: localhost
            port: 6379
            password: kuiper
            #Timeout in ms
            timeout: 1000
          sqlite:
            #Sqlite file name, if left empty name of db will be sqliteKV.db
            name:

  - Modify the JMeter script and make sure `redis_srv` is set to the correct Redis address.

- A Redis client tool should be compiled and run during the test.

  `go build -o test/redis/set test/redis/set.go`

- Run JMeter with the following command, specifying the `fvt` property in the JMeter command line; `fvt` is where you develop eKuiper. The script searches for `test/redis/set` in that location.

  `bin/jmeter.sh -Dfvt="/Users/rockyjin/Downloads/workspace/edge/src/ekuiper"`

- The JMeter script creates streams and rules through the REST API, and all metadata is stored in Redis.

- JMeter uses the Redis client tool to get the configuration directly from Redis and compares it with what was created through the REST API.

This script verifies the use of the memory source/sink to form a pipeline of multiple rules, and the use of dynamic properties in the MQTT sink to publish the result to multiple topics. The test also uses an event-time window to guarantee that the result is constant.

- Create two MQTT streams, device1 and device2, to simulate two sensors. The data is read from `iot_data_ts.txt`, which contains timestamps.

- Create a memory stream to store the convergence of the filtered data from the two sensors. The stream subscribes to a wildcard memory topic to receive data from multiple memory sinks.

- Create two rules against the two MQTT streams to do some filtering and sink the results to memory sink topics.

- Create the final rule against the memory stream and calculate the average temperature for each device with GROUP BY. The result is then sent to a dynamic MQTT topic by using the dynamic property.